
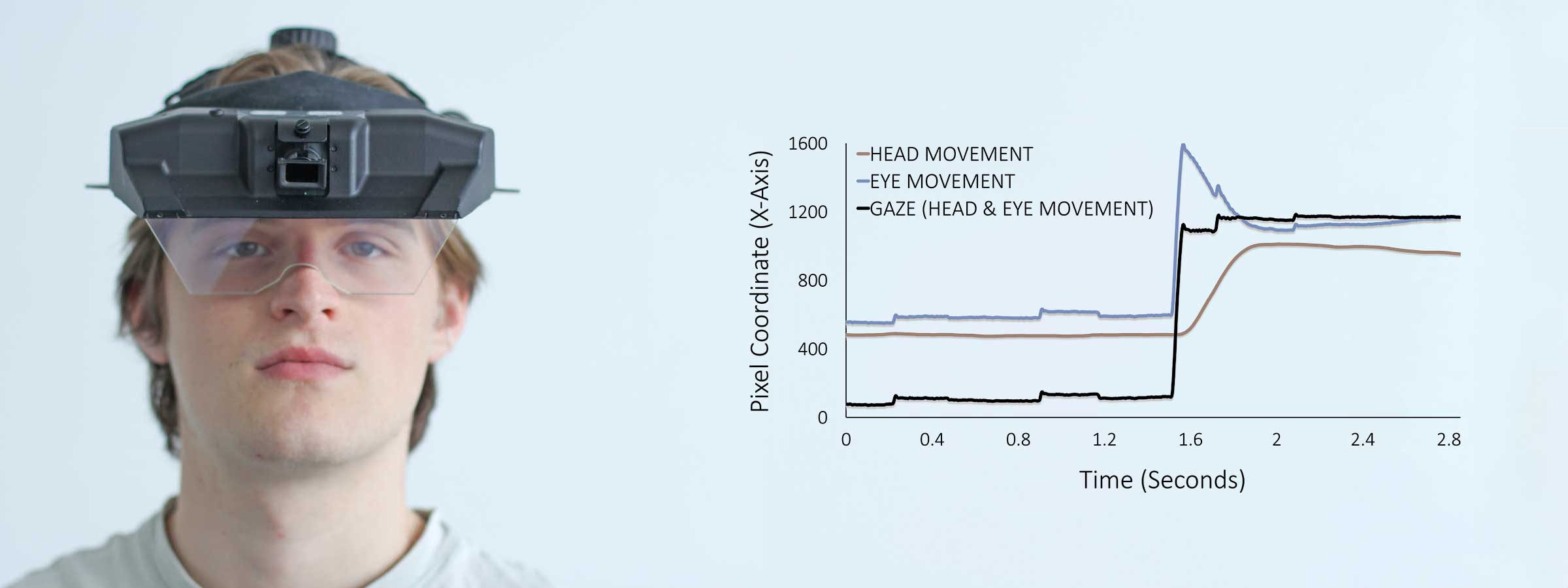

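The EyeLink 3 tracks both eye and head movements at up to 1000 Hz. The standard six-degrees-of-freedom (6DOF) head data provide X, Y, and Z coordinates (in mm) along with roll, pitch, and yaw angles. For more information on the EyeLink 3 head position and rotation data, see our comprehensive blog on 6DOF head tracking.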

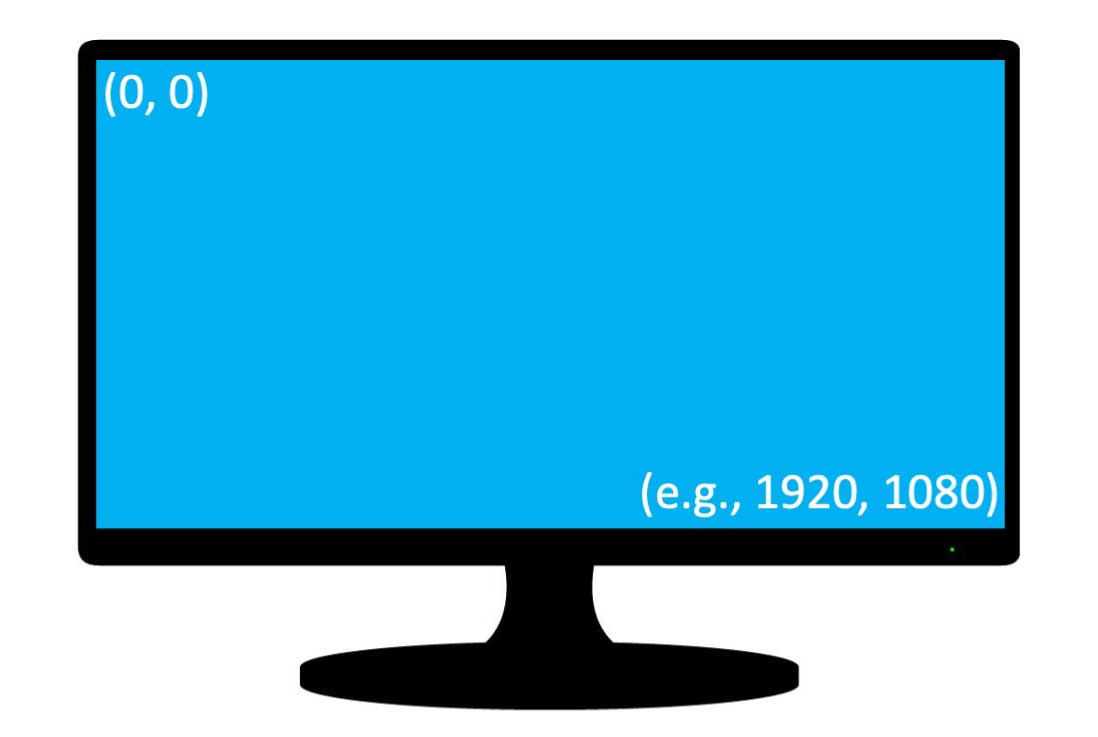

Like all EyeLink systems, the EyeLink 3 delivers precise gaze data in screen pixel coordinates, where (0, 0) marks the top-left corner and the bottom-right corner reflects the full screen resolution (e.g., 1920×1080). In addition to standard gaze data and 6DOF head pose data, the EyeLink 3 also provides separate head and eye-in-head positions in screen pixel coordinates. This feature allows a simple and clear analysis of the relative contributions of head and eye movements to shifts in gaze. In this blog we explain the three pixel-based coordinates (gaze, head, and eye) that the EyeLink 3 provides.

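Figure 1: (X, Y) screen coordinates for eye tracker data, where (0, 0) is the top-left corner and the bottom-right corner depends on the pixel resolution (e.g., 1920×1080).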

Gaze

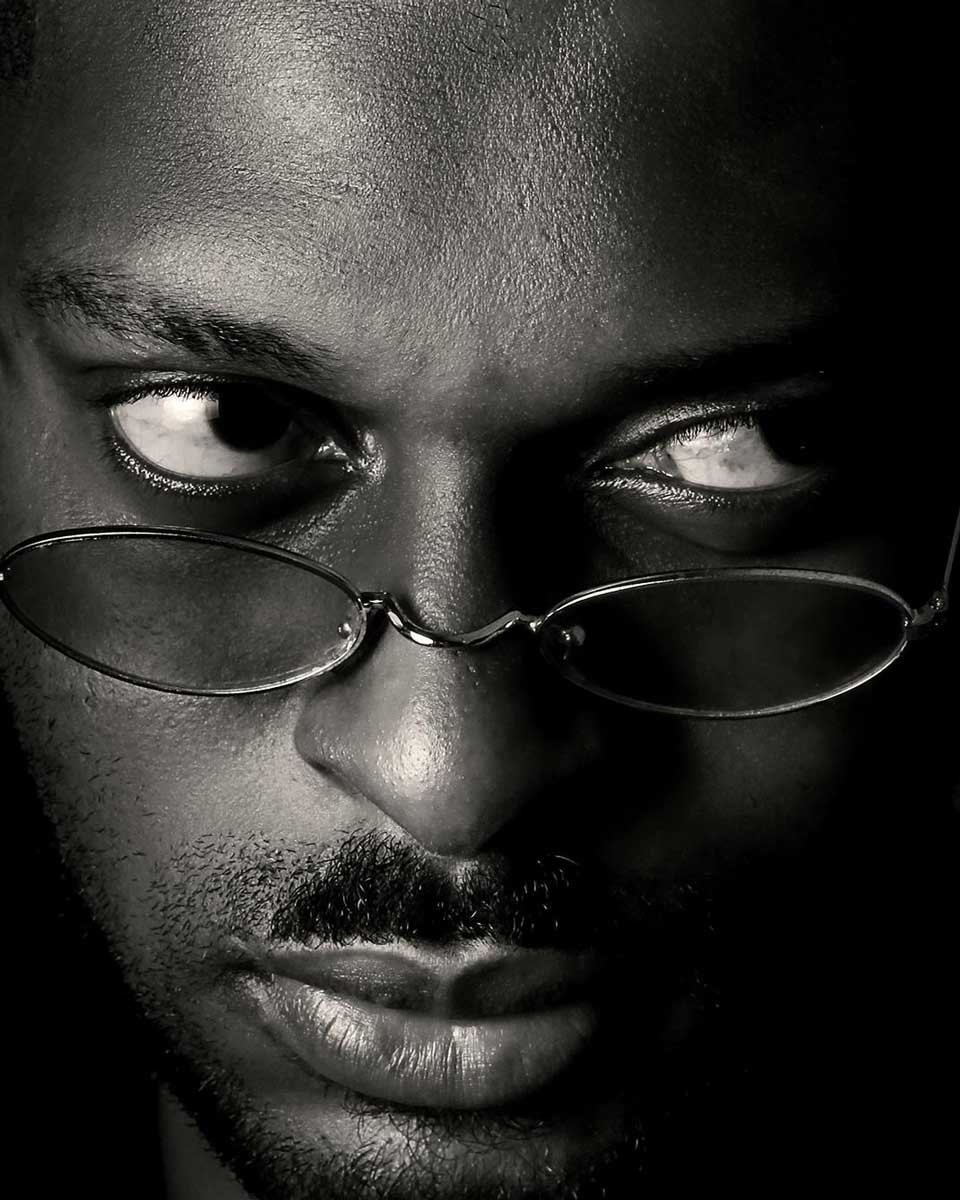

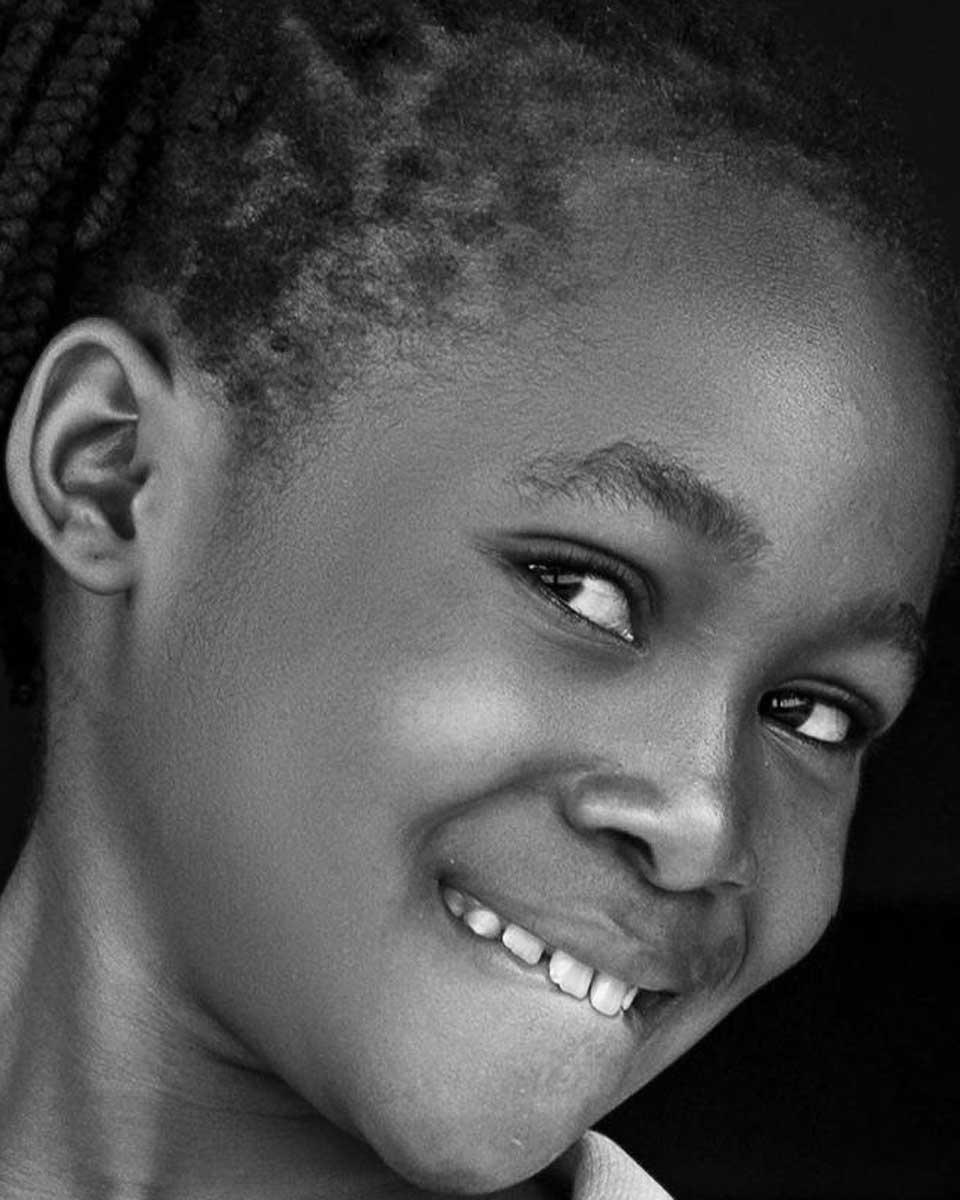

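In eye-tracking research, "gaze" refers to the point of regard on the screen, and it reflects both the head pose (its position and orientation) and the rotation of the eye within the head. The images below illustrate how head and eye movements affect gaze. In the first photo, the person looks directly at the camera, showing a baseline position with no head movement and no eye rotation. The second photo shows gaze directed to the model's left purely as a result of a leftward head movement, while the third photo also captures leftward gaze, but this time purely as a result of eye rotation. The last image shows a central gaze position following a leftward head movement combined with a rightward eye rotation.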

Figure 2: Gaze in natural portraits, reflecting a combination of head and eye movements.

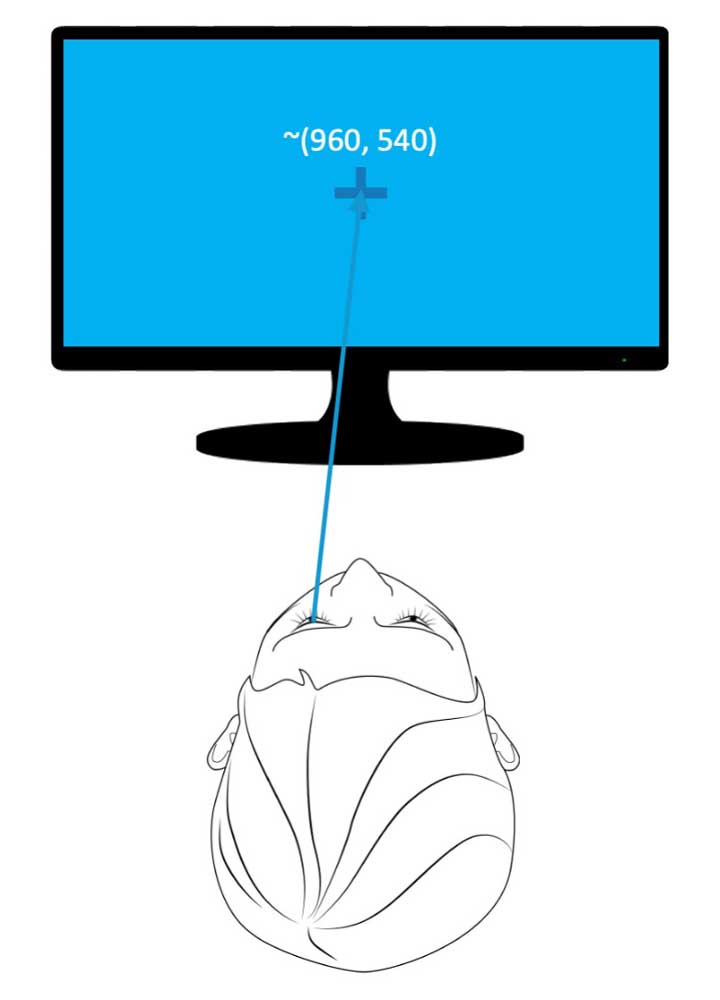

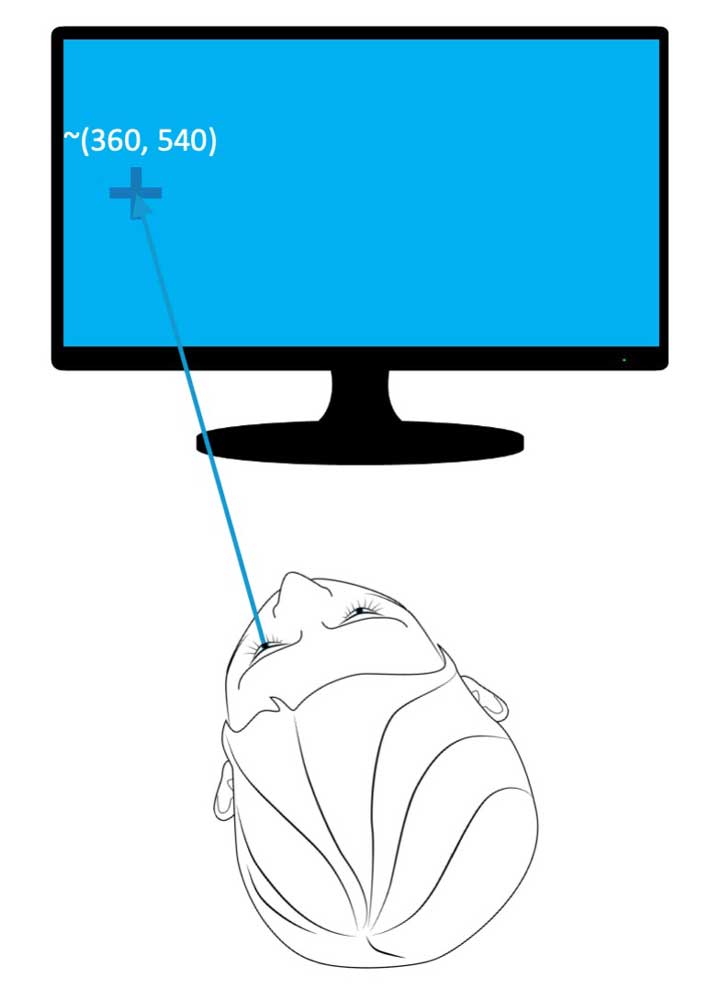

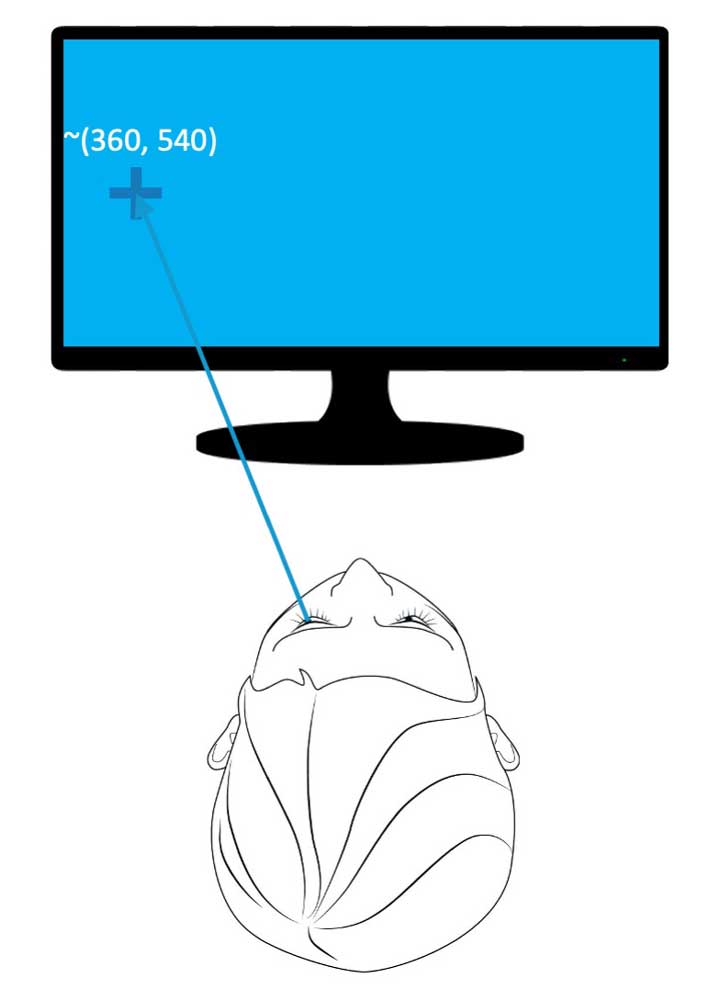

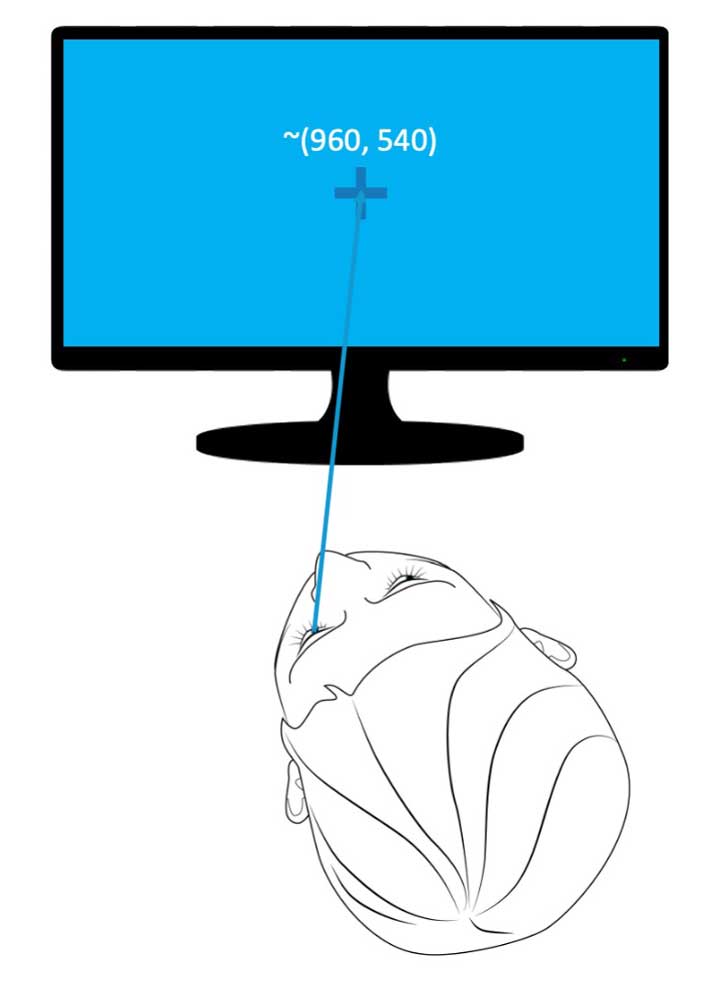

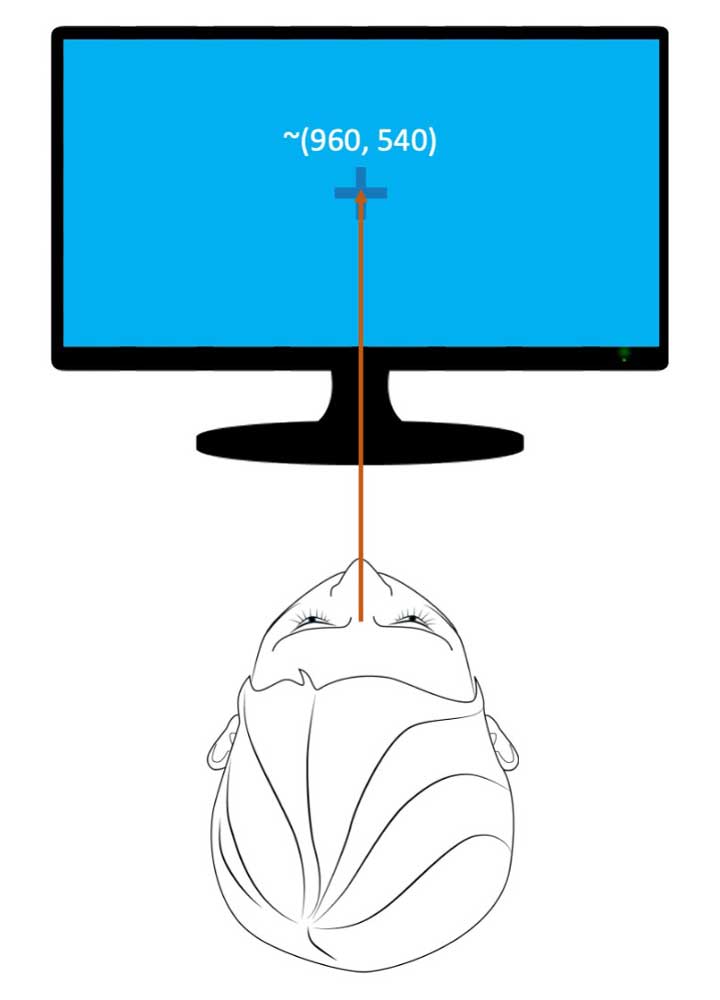

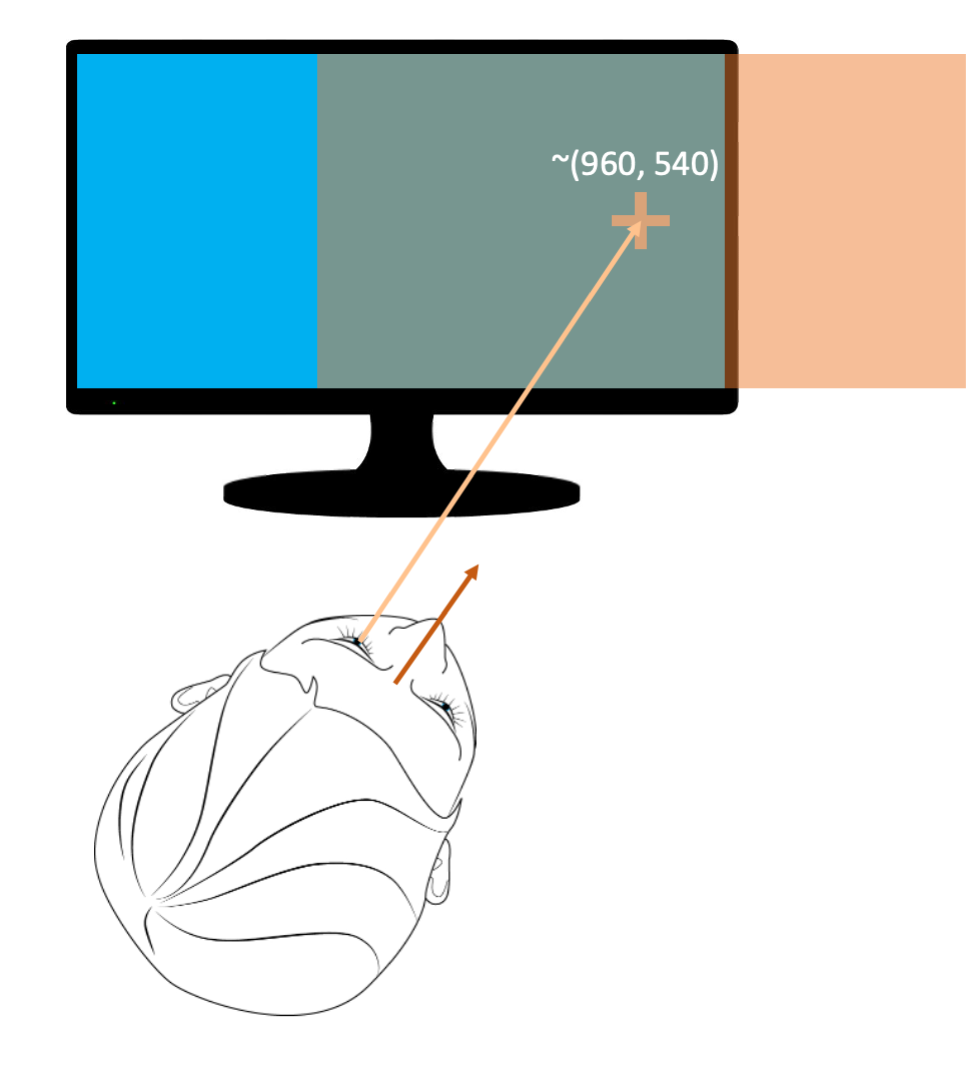

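To capture gaze coordinates on a screen in an experimental setting, imagine a virtual rod extending from the back of the eye, through the pupil, toward the screen. The point (or pixel coordinate) at which this rod intersects the screen is the gaze coordinate. In the first example below, assuming a 1920×1080 pixel screen, the gaze value will be close to (960, 540), because the person is looking at the cross in the center of the screen. The next two images show gaze pixel values of approximately (360, 540). In the second image, the shift of gaze toward the left of the screen is driven by head movement alone, whereas in the third image it is driven by eye rotation alone. The last image again shows central gaze (960, 540), but this time the gaze position reflects a combination of a leftward head movement and a rightward eye rotation. All gaze coordinates reflect both the angle of the eye in the head and the angle of the head itself. Most traditional eye-tracking experiments base their analyses on gaze data.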

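Figure 3: Gaze changes with both head and eye movements. To capture gaze pixel-based coordinates on the screen, imagine a virtual rod extending from the back of the eye, through the pupil, toward the screen. The pixel that the rod touches provides the gaze coordinate.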
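The "virtual rod" description above is, geometrically, a ray intersecting a plane. The following is a minimal sketch of that idea and not the EyeLink 3's actual computation. The `Screen` class, the function `ray_to_pixel`, the physical screen size (480 × 270 mm), and the axis conventions are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Screen:
    """Hypothetical screen description; the physical size is an assumption."""
    width_px: int = 1920
    height_px: int = 1080
    width_mm: float = 480.0
    height_mm: float = 270.0


def ray_to_pixel(origin_mm, direction, screen):
    """Intersect a ray with the screen plane (z = 0) and return (x, y) in pixels.

    origin_mm: (x, y, z) of the ray origin in mm, measured from the screen
        center, with +x to the right, +y downward, +z toward the participant.
    direction: (dx, dy, dz) pointing toward the screen, so dz must be negative.
    """
    ox, oy, oz = origin_mm
    dx, dy, dz = direction
    if dz >= 0:
        raise ValueError("ray does not point toward the screen")
    t = -oz / dz  # ray parameter at which z reaches 0
    x_mm = ox + t * dx
    y_mm = oy + t * dy
    # Convert mm from the screen center to pixels from the top-left corner.
    px = screen.width_px / 2 + x_mm * screen.width_px / screen.width_mm
    py = screen.height_px / 2 + y_mm * screen.height_px / screen.height_mm
    return px, py


screen = Screen()
# Looking straight at the screen center from 600 mm away.
print(ray_to_pixel((0.0, 0.0, 600.0), (0.0, 0.0, -1.0), screen))
# Same origin, ray angled toward the left of the screen.
print(ray_to_pixel((0.0, 0.0, 600.0), (-0.25, 0.0, -1.0), screen))
```

With these assumed dimensions (4 pixels per mm), the first ray lands at the screen center and the second lands 600 pixels to its left. The same function could stand in for the head "rod" by changing the origin and direction, which is why it takes a generic ray rather than an eye.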

Head

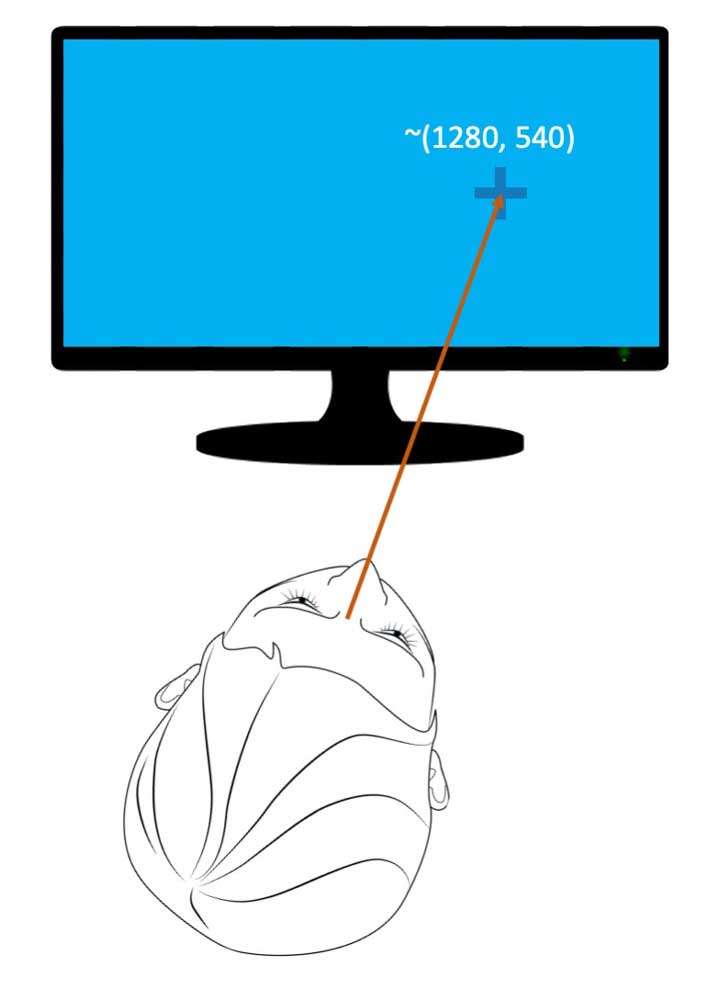

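In addition to the standard 6DOF head pose data, an indication of the participant's head pose is also available in screen pixel coordinates. For head pixel-based coordinates, imagine a virtual rod extending forward from between the participant's eyes to the screen/calibration plane. The point at which the rod intersects the screen provides the head coordinate. For example, in the first image below, again assuming a 1920×1080 pixel screen, the head pixel-based coordinate is approximately (960, 540), because the person's head is pointing at the center of the screen. In the next image, the head pixel-based coordinate is approximately (1280, 540), because the head has moved to the right. As with gaze data, the novel head pixel-based coordinates provided by the EyeLink 3 can be negative (if the virtual rod intersects the calibration plane above or to the left of the screen) or can exceed the monitor's pixel resolution (if the virtual rod intersects the calibration plane to the right of or below the monitor).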

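Figure 4: To capture head pixel-based coordinates on the screen, imagine a virtual rod extending from the center of the head toward the screen. The pixel that the rod touches provides the head coordinate.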
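Because head (and gaze) pixel coordinates can be negative or larger than the screen resolution, it can help to label where a sample falls relative to the screen before analysis. The helper below is a hypothetical sketch; the name `locate_on_screen` and its return labels are not part of any EyeLink API.

```python
def locate_on_screen(x, y, width_px=1920, height_px=1080):
    """Describe where a pixel coordinate falls relative to the screen.

    Returns "on-screen", or a label such as "left" or "below-right" when the
    coordinate is negative or exceeds the pixel resolution.
    """
    if y < 0:
        vertical = "above"
    elif y > height_px:
        vertical = "below"
    else:
        vertical = ""
    if x < 0:
        horizontal = "left"
    elif x > width_px:
        horizontal = "right"
    else:
        horizontal = ""
    if not vertical and not horizontal:
        return "on-screen"
    # Join whichever parts are non-empty, vertical first.
    return "-".join(part for part in (vertical, horizontal) if part)


print(locate_on_screen(960, 540))     # on-screen
print(locate_on_screen(-50, 540))     # left
print(locate_on_screen(2000, 1200))   # below-right
```

Whether a coordinate exactly equal to the resolution counts as on-screen is a design choice; this sketch treats it as on-screen to match the description of the bottom-right corner above.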

Eye

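Eye pixel-based coordinates are another novel concept unique to the EyeLink 3. They represent the contribution of eye rotation to gaze. Conceptually, this can be compared to using a desktop EyeLink eye-tracking system with a head support: when the head is fixed, the only contribution to gaze comes from eye movements. When the head is unconstrained, however, both head and eye movements typically contribute to gaze. Eye pixel-based coordinates make it possible to determine the relative contribution of eye rotation to gaze position.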

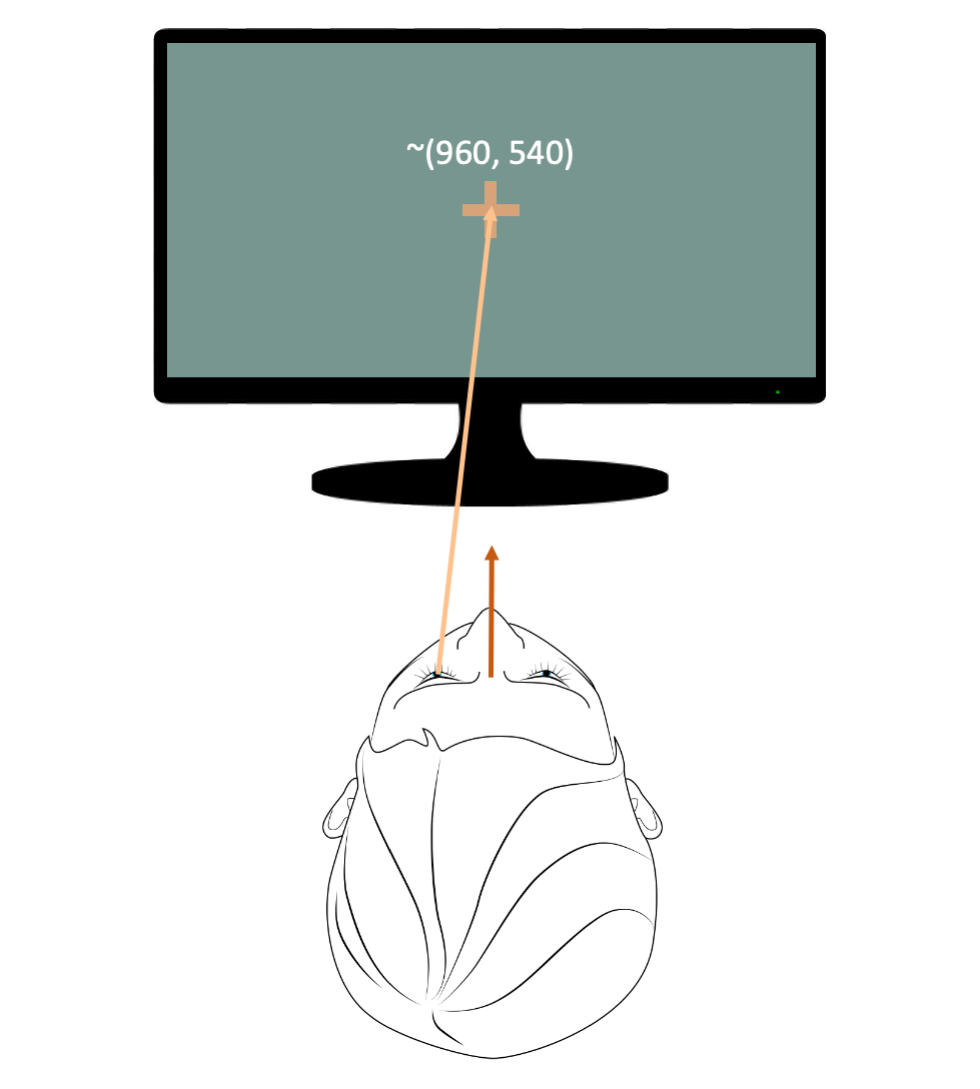

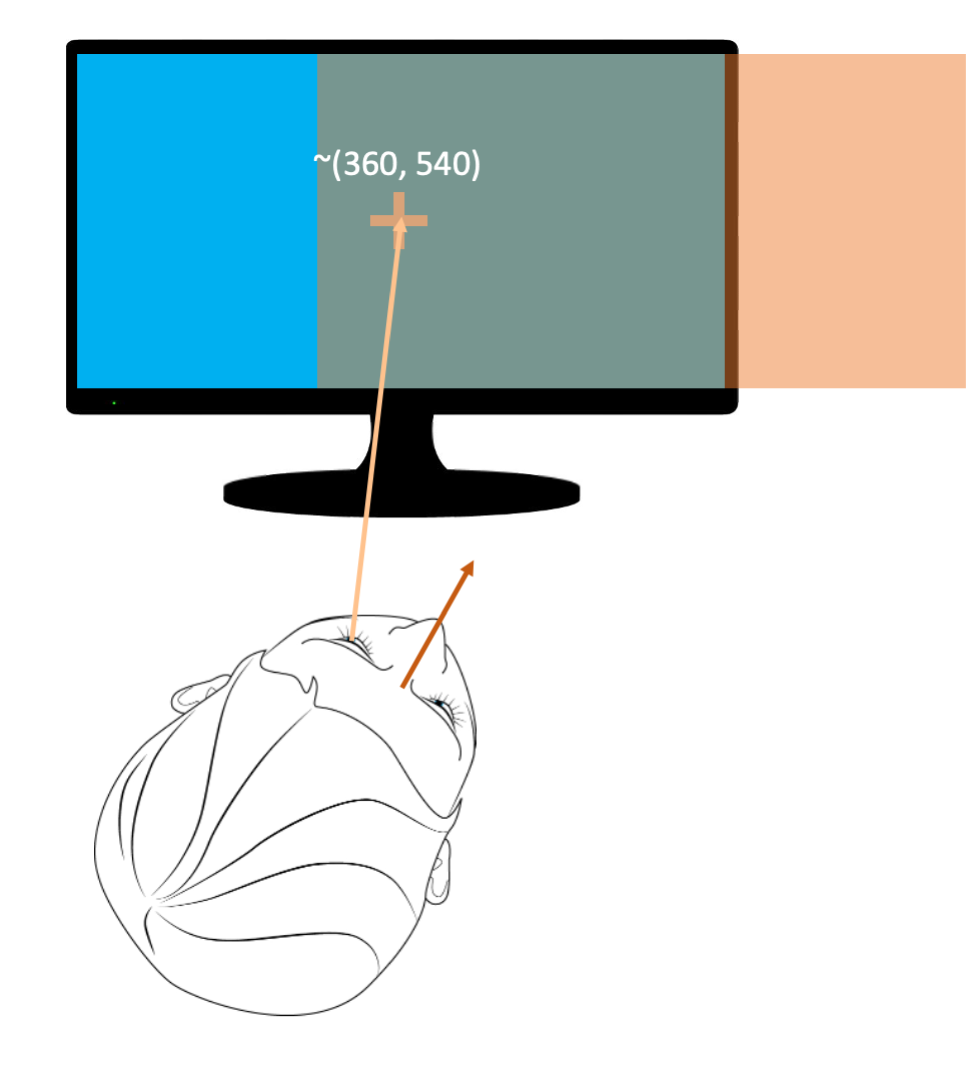

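The eye pixel-based coordinate measure represents the rotation of the eye within the head. Imagine a virtual screen that is exactly the same size as the physical screen and is attached to the participant's head. In other words, this screen moves with the person's head (see the orange screen moving with the head below). Note that in the figure below the virtual screen moves only along the X-axis, but it can also move along the Y-axis.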

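Figure 5: To capture eye pixel-based coordinates, first imagine a virtual screen attached to the head that moves along the X- and Y-axes. (Note that the figure shows movement along the X-axis only.) The eye pixel-based coordinate is the gaze position on this virtual screen.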

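The eye pixel-based coordinate is the gaze position on the virtual screen (which shares the same pixel resolution as the actual screen). For example, the left image below shows a person looking straight at the actual screen, so that the gaze and head coordinates both lie at the center of the actual screen (960, 540). Because the virtual screen is in the same position as the actual screen, the eye coordinate (on the virtual screen) is also (960, 540). In the second image, the head has rotated to the right, and the participant continues to look straight ahead, at a point on the right of the actual screen. Because the virtual screen rotates with the head, the eye coordinate on the virtual screen is still (960, 540), reflecting the fact that the head has rotated to the right while the eye continues to look straight ahead. In other words, the eye has not rotated within the head. The final image shows the person's head rotated to the right while they remain fixated on the cross in the center of the actual screen. This involves a leftward counter-rotation of the eye, which is reflected in the eye coordinate: it now lies on the left side of the virtual screen, at approximately (360, 540).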

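Figure 6: Eye pixel-based coordinates lie on a virtual screen that is exactly the same size as the physical screen and rotates with the head.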
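The three cases described above can be reproduced with a deliberately simplified, rotation-only model along the X-axis. This is an illustrative sketch and not how the EyeLink 3 derives its data: it ignores head translation and the offset between the eye and the head's center of rotation, and the viewing distance (600 mm), pixel density (4 px/mm), and function name are assumptions.

```python
import math


def pixel_coords_from_angles(head_yaw_deg, eye_in_head_deg,
                             distance_mm=600.0, px_per_mm=4.0, center_x=960.0):
    """Return (gaze_x, head_x, eye_x) in pixels for a rotation-only model.

    Positive angles point to the right. head_x is where a rod from the head
    meets the real screen, eye_x is where the line of sight meets a virtual
    screen fixed to the head, and gaze_x is where the line of sight meets the
    real screen (head and eye rotation combined).
    """
    def to_px(angle_deg):
        return center_x + distance_mm * math.tan(math.radians(angle_deg)) * px_per_mm

    head_x = to_px(head_yaw_deg)
    eye_x = to_px(eye_in_head_deg)
    gaze_x = to_px(head_yaw_deg + eye_in_head_deg)
    return gaze_x, head_x, eye_x


# Head and eye both straight ahead: all three coordinates coincide.
print(pixel_coords_from_angles(0.0, 0.0))
# Head turned right, eye straight ahead in the head: gaze follows the head.
print(pixel_coords_from_angles(14.0, 0.0))
# Head turned right, eye counter-rotated left: gaze stays at the center.
print(pixel_coords_from_angles(14.0, -14.0))
```

In the third call the eye coordinate falls to the left of 960 while the head coordinate falls to the right of it, which mirrors the final image described above.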

Gaze, Head, and Eye Data

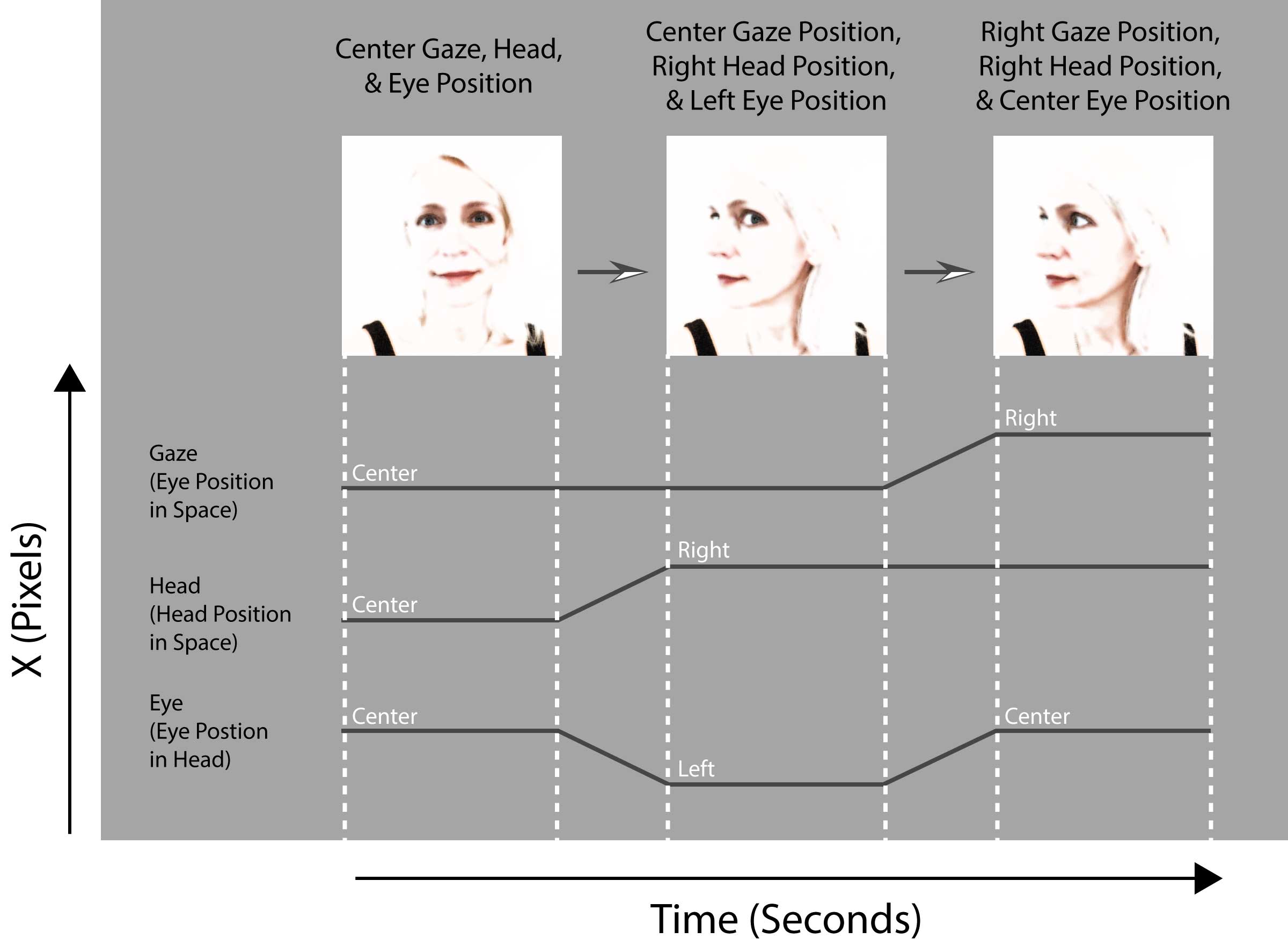

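The visual representation below illustrates how the gaze, head, and eye pixel-based data vary (along the X-axis). The left image depicts a person looking straight at the center of the screen. The coordinates for gaze, head, and eye position are identical. The middle image depicts the head turned to the participant's right while gaze remains fixed on the center of the screen. As the plotted data show, gaze is still centered on the same screen coordinate as in the left image. However, the head has rotated to the right and the eye has rotated to the left (to maintain central fixation on the physical screen). Finally, the right image depicts the head turned to the right with the participant looking straight ahead. Because the eye has not counter-rotated (the eye coordinate is unchanged), gaze shifts to the right.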

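Figure 7: Pixel-based coordinates of gaze, head pose, and eye movement along the X-axis. The left image shows a person fixating the center, resulting in identical gaze, head, and eye pixel-based coordinates. The middle image shows the head rotated to the right and the eye rotated to the left to maintain central fixation. The right image shows gaze and head directed to the right, with the eye centered in the head and not rotated.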

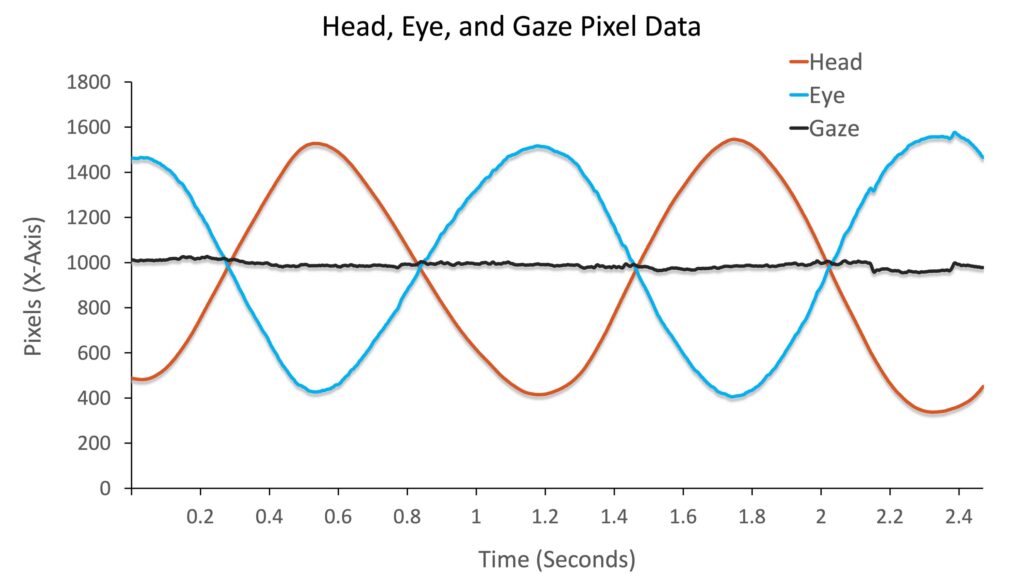

To show the complete dissociation between head, eye, and gaze data across time, the plot below illustrates the three pixel-based data types as a participant makes "yaw" head rotations (i.e., shakes their head back and forth) whilst maintaining fixation on a central target. The gaze data remain unchanged, approximately fixed at the center of the screen along the X-axis, but the head and eye data change in opposite directions, as the eye counter-rotates within the head to maintain fixation.

Figure 8: Pixel-based coordinates along the X-axis while the head and eye move in opposite directions to maintain central fixation.
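One simple way to quantify this kind of dissociation is to compare how much each signal varies and how the head and eye signals co-vary. The sketch below uses synthetic stand-in data rather than recorded data; the signal amplitudes, the 0.5 Hz shake rate, and the helper names `pearson` and `span` are assumptions made for illustration.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (both must vary)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def span(values):
    """Peak-to-peak range of a sequence, in the same units as its values."""
    return max(values) - min(values)


# Synthetic data, NOT recorded data: 2 s sampled at 1000 Hz, 0.5 Hz head shake.
times = [i / 1000 for i in range(2000)]
head_x = [960 + 300 * math.sin(2 * math.pi * 0.5 * t) for t in times]
eye_x = [960 - 300 * math.sin(2 * math.pi * 0.5 * t) for t in times]
gaze_x = [960.0 for _ in times]  # fixation held at the screen center

print("head/eye correlation:", pearson(head_x, eye_x))
print("gaze span (px):", span(gaze_x))
print("head span (px):", span(head_x))
```

A head/eye correlation close to -1 together with a small gaze span is the pattern expected when the eye counter-rotates to hold fixation. The correlation is not computed against the gaze signal here, because a perfectly constant series has zero variance.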

Conclusion

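In addition to providing full 6DOF head position and rotation data and pixel-based gaze data, the EyeLink 3 eye tracker provides novel head and eye pixel-based coordinates. These data offer a simple and convenient way to determine the relative contributions of head and eye movements to changes in gaze in traditional eye-tracking tasks (such as visual search, scene perception, reading, forward walking, and smooth pursuit). All data are saved in the recorded data file and streamed in real time to the stimulus display PC. This opens up possibilities for developing new tasks, including head- or eye-contingent tasks.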

Contact

If you would like to learn more about the EyeLink 3, you can call us (+1-866-821-0731 or +1-613-271-8686) or send us an email.
